Apple AirPods Live Translation: Complete Guide to Features & Alternatives

Apple's announcement of Live Translation for AirPods has sparked a wave of excitement. With just a double tap, your earbuds can now become real-time language translators, an idea that once felt like science fiction and is now part of everyday tech.

But the question remains: is AirPods Live Translation the breakthrough we've been waiting for, or just another step in a much bigger journey toward universal communication? In this post, we'll look at what the feature offers, where it can shine, its limitations, and how it stacks up against dedicated translation tools already on the market.

Let's get started.

AirPods Live Translation: Supported Languages and Devices

On September 9, 2025, Apple announced its Live Translation feature for AirPods, which debuts with the AirPods Pro 3 and turns the earbuds into real-time language translators. By double-tapping the stems, users can activate translation mode, where foreign speech is recognized and instantly played back in their preferred language.

When both participants wear compatible AirPods, Live Translation delivers each person's speech directly in the other's language, privately and in real time. The earbuds momentarily lower surrounding noise to improve clarity, which makes the conversation flow more naturally than when only one person is wearing AirPods.

If the other person isn’t wearing AirPods, Live Translation still keeps the conversation going. Your iPhone displays your words instantly in their language on screen, and if needed, it can also read them aloud, making sure the exchange remains clear and accessible.

Which languages does AirPods Live Translation support?

Here are the languages supported by Live Translation at launch:

- English

- French

- German

- Portuguese

- Spanish

Apple plans to expand support later in 2025, adding Italian, Japanese, Korean, and Simplified Chinese to this list.

Which AirPods models support live translation?

Live Translation debuts with the AirPods Pro 3, but Apple has confirmed it will also be available on:

- AirPods Pro 2

- AirPods 4 with Active Noise Cancellation

⚡ Note: All supported models require an iPhone running iOS 26 with Apple Intelligence enabled, since translations are processed on the iPhone rather than the AirPods themselves.

Top Use Cases of Apple AirPods Live Translation in 2025

Apple is positioning its live translation feature as more than just a novelty; it's designed to make everyday communication across languages smoother and more accessible. Below are the key scenarios where the feature is likely to shine:

Travel Made Easier

When visiting a foreign country, travelers can quickly understand directions and handle casual interactions without holding up their phones. This hands-free translation makes navigating new environments feel less intimidating and more natural.

Smoother Conversations

In one-on-one exchanges, Live Translation bridges the gap by letting each person hear speech in their own language. The private playback through AirPods ensures the conversation flows more naturally than relying on a phone screen.

Productive Meetings

For international teams, Live Translation can help reduce language barriers during quick discussions or small group meetings. By offering instant comprehension, it allows participants to focus on ideas instead of struggling with miscommunication.

Key Limitations of AirPods Live Translation

While Apple’s live translation feature is a major step forward, it comes with some clear limitations that users should keep in mind.

Limited Language Coverage

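At launch, Live Translation supports only five languages: English, French, German, Portuguese, and Spanish. Even with Italian, Japanese, Korean, and Simplified Chinese planned for later in 2025, this falls short of global needs, especially for travelers and professionals who require broader coverage.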

Dependency on Apple Ecosystem

The feature requires both compatible AirPods and an Apple Intelligence-enabled iPhone running iOS 26. This setup limits accessibility for users who don't already own Apple's latest devices, effectively tying the feature to the Apple ecosystem.

Latency and Internet Dependency

Real-time translation still depends on connectivity and processing speed. Any lag can disrupt the natural flow of conversation, particularly in fast-paced exchanges.

Best Alternatives to AirPods Live Translation

Although AirPods Live Translation is great for casual use, dedicated platforms are still essential in professional and large-scale settings. The following tools are designed to go beyond quick day-to-day exchanges, offering the accuracy, stability, and workflows needed for meetings, events, business communication, and frequent travel.

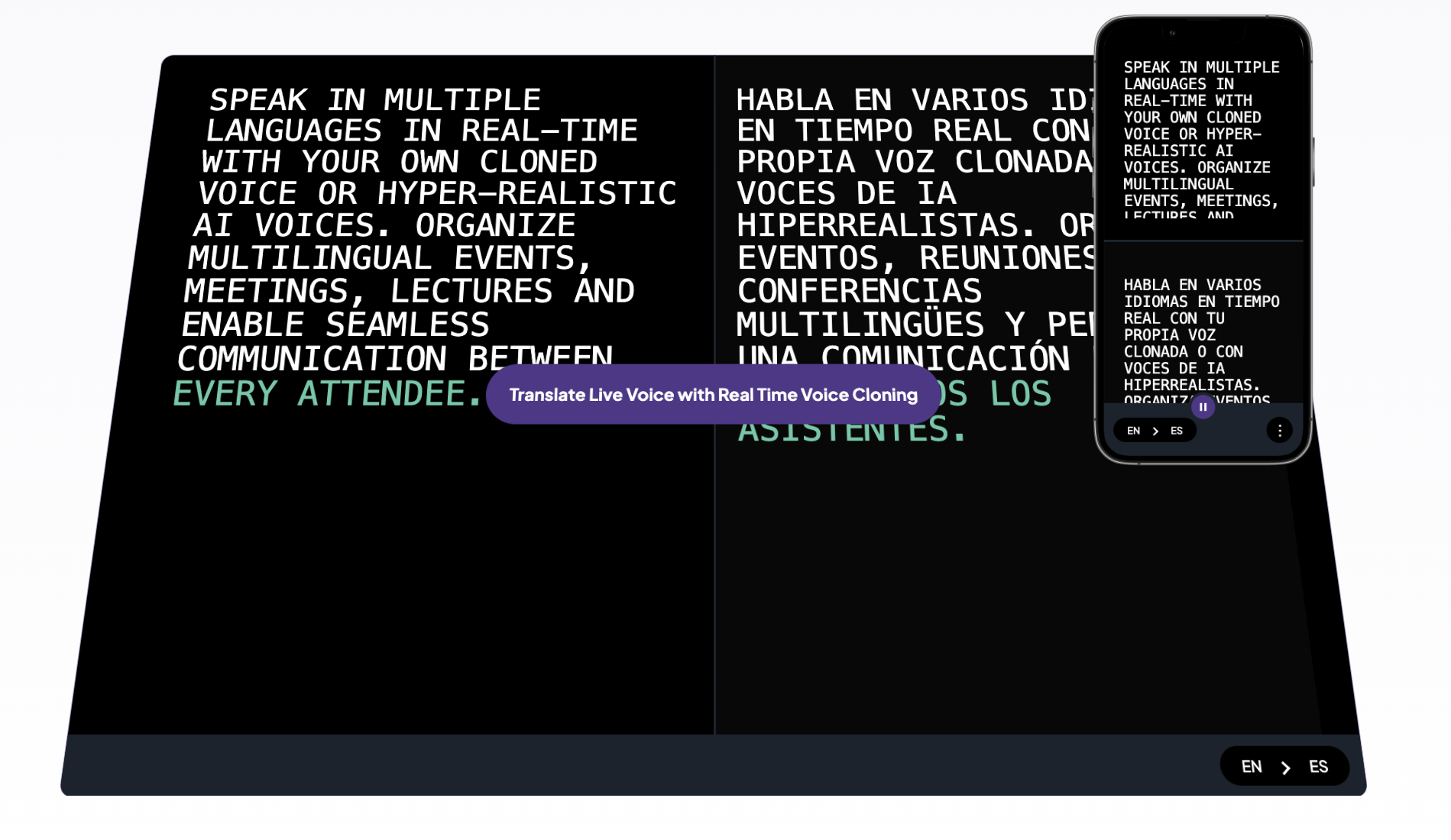

Maestra

Maestra brings live voice translation to a professional level, making it a powerful solution for multilingual meetings, events, and global collaborations. The tool captures speech, translates it into each listener's preferred language in real time, and delivers it back as natural-sounding voice while also providing captions.

Standout features of Maestra include:

- Support for 125+ languages, covering a wide range of global communication needs

- Multilingual sessions, allowing each participant to select and listen in their own preferred language

- Voice cloning that preserves the speaker’s natural tone and style in different languages

- Easy session sharing through a QR code or link, making it simple for participants to join instantly

- Save and repurpose conversations, turning them into transcripts, subtitles, or dubbed content for future use

Pricing: Real-time voice translation starts at $79/month.

DeepL Voice

DeepL Voice brings the company’s renowned translation quality into real-time conversations, making it a powerful tool for teams and professionals working across languages. Built on the same AI models that power DeepL Translator, the voice solution is designed to capture nuance and tone while enabling smoother multilingual communication during calls, meetings, or live interactions.

Standout features of DeepL Voice include:

- Real-time speech translation that delivers natural, context-aware results

- Support for 30+ languages, with a strong emphasis on high-quality European coverage

- Clear, natural-sounding output that preserves tone and intent rather than just literal meaning

- Integration with productivity tools and workflows for seamless team communication

- Secure, privacy-focused infrastructure that keeps sensitive conversations protected

Pricing: DeepL does not provide a standard public price for its voice translator. Instead, it offers custom, quote-based pricing for businesses and enterprises based on their specific usage, volume, and needs.

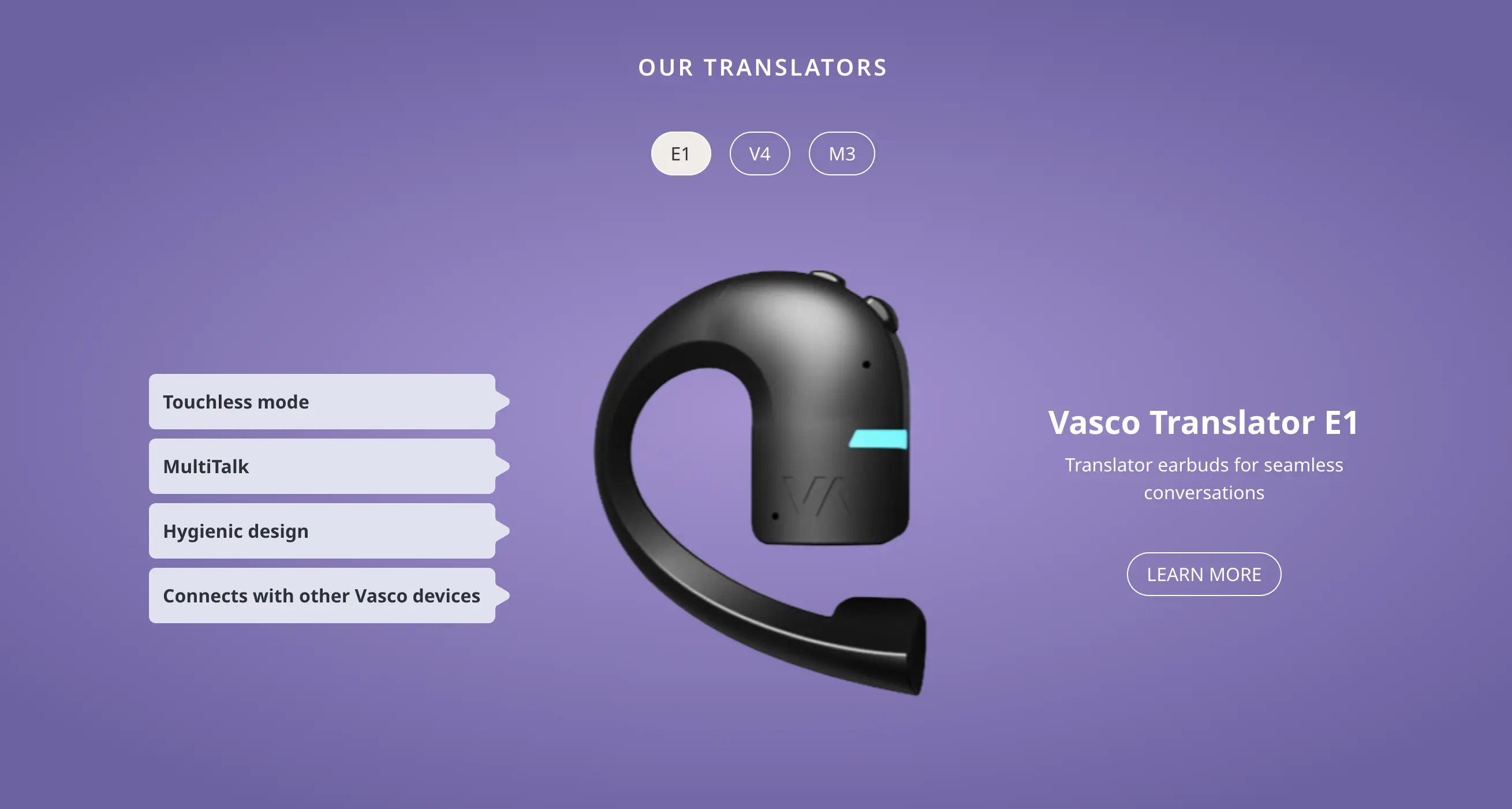

Vasco Translator

Vasco focuses on real-time voice translation that’s accessible and practical for travelers, educators, and professionals on the go. Its Translator E1 earbuds, combined with the Vasco app, make it easy to hold natural conversations across languages without the need for additional subscriptions.

Standout features of Vasco Translator include:

- Support for 50+ languages, covering a wide range of travel and professional needs

- Real-time speech translation with clear voice playback in both directions

- Loudspeaker and earbuds modes, suitable for both group settings and private one-on-one exchanges

- A built-in SIM card that provides internet access in many countries, so translation works without relying on local Wi-Fi or a phone's data plan

- No recurring subscription fees, making it cost-effective compared to some alternatives

Pricing: Vasco Translator E1 is $389.

To sum up, if you're looking for:

🎤 A professional-grade platform for multilingual meetings and events, you can choose Maestra.

🏢 Context-aware translations for work and enterprise needs, you can go with DeepL Voice.

✈️ A simple, portable device for everyday conversations and travel, you can pick Vasco Translator.

🎧 A casual, consumer-friendly option for everyday conversations, you can use AirPods Live Translation.

The Future of AI Live Translation: What's Next Beyond Apple AirPods

Apple’s introduction of Live Translation is an exciting step, but it also raises questions about where the technology is headed.

Will Apple extend its capabilities?

Right now, Live Translation is limited in both language coverage and ecosystem support. Over time, Apple may add dozens of new languages, refine accuracy through Apple Intelligence, and reduce latency, making the feature more competitive with professional platforms.

Even with future improvements, dedicated platforms will remain critical for creators, educators, and businesses. Tools like Maestra, DeepL, and Vasco are built for large-scale projects, professional accuracy, and accessibility features that go beyond the casual, one-on-one use case that AirPods serve best.

Emerging Technologies in Live Translation

The next generation of live translation tools will likely build on Apple’s momentum while pushing far beyond what’s possible today. Some promising developments include:

- Hyper-personalized translation models, fine-tuned to an individual’s speaking style, accent, and vocabulary for more natural, customized communication

- Cross-lingual sentiment preservation, ensuring that tone, nuance, and emotional intent carry over during translation, not just the literal meaning

- Holographic interpreters in AR/VR, where avatars provide live translated speech and captions inside immersive environments

- Brain-computer interface (BCI) translation research, exploring direct decoding of neural signals into translated speech or text for faster, hands-free communication

- Real-time multi-speaker differentiation, where AI separates and translates overlapping voices in group conversations without losing clarity

- Cultural adaptation engines, capable of reshaping idioms, metaphors, and humor so translations resonate authentically across regions

- Quantum-enhanced language processing, a highly speculative research area exploring whether quantum computing could one day speed up or improve complex translation tasks

These advancements hint at a future where translation isn’t just about words; it’s about preserving human connection across every cultural and linguistic barrier.

Conclusion

Today’s world is more interconnected than at any point in history, and language often stands as the final barrier. Whether it’s negotiating with an international partner or simply asking for directions on a trip abroad, the ability to understand and be understood instantly can transform the way we connect with others.

As Apple pushes Live Translation into the mainstream with AirPods, it signals a future where breaking down language barriers could become as effortless as putting on a pair of earbuds. While the feature is still in its early stages (with limited language options and reliance on Apple’s ecosystem), it marks a meaningful step toward a world where seamless, real-time communication is the norm.

Frequently Asked Questions

How can I get Live Translation enabled on my AirPods?

To use Live Translation on your AirPods, you'll need a compatible model: AirPods Pro 3, AirPods Pro 2, or AirPods 4 with Active Noise Cancellation. Make sure your iPhone is running iOS 26 with Apple Intelligence activated, since translations are processed on the phone. Once everything is set up, you can activate Live Translation by double-tapping the AirPods stems.

Will Live Translation come to AirPods Pro 2?

Yes. In addition to launching on AirPods Pro 3, Apple has confirmed that Live Translation will also be available on AirPods Pro 2 and AirPods 4 with Active Noise Cancellation. Remember that an iPhone running iOS 26 with Apple Intelligence enabled is required.

Can AirPods Live Translation work offline?

Currently, Live Translation relies on Apple Intelligence and connectivity for processing. While some on-device features may reduce latency, a stable internet connection is generally required for accurate, real-time translations.

Do both people need AirPods for Live Translation to work?

No. If only one person is wearing compatible AirPods, the translation still works. Your iPhone displays your words instantly in the other person’s language, and it can also read them aloud if needed. When both participants have AirPods, each hears speech directly in their own language for a smoother exchange.

How do Apple AirPods handle background noise during live translation?

When Live Translation is activated, the earbuds temporarily lower surrounding noise using Active Noise Cancellation. This ensures speech is heard more clearly and translations are delivered without distraction.

How does AirPods Live Translation compare to Google Pixel Buds and Samsung Galaxy Buds 3?

Google Pixel Buds use a tap-and-speak method in conjunction with the Google Translate app, where one person speaks into the buds and the other listens to the phone's speaker.

Samsung Galaxy Buds 3's "Interpreter" mode also relies on the paired phone, with the person wearing the buds hearing the translation and the other person speaking into the phone's microphone.

The key differentiator for Apple's feature appears to be a more seamless, two-way conversational experience designed to work directly with the iPhone.