The Complete Guide to Video Dubbing

The internet has erased borders for content. A YouTube video posted in London can find a massive audience in São Paulo. An online course filmed in New York can gain thousands of students in Tokyo. A corporate training video created in Berlin can be rolled out across offices in Dubai, Toronto, and Singapore.

There’s just one problem: language.

While English dominates much of the global online space, over 75% of internet users prefer consuming content in their own language. And even those who speak English fluently engage more deeply when video content is delivered in their native tongue.

This is where AI video dubbing software changes the game.

In the past, dubbing was a high-cost, labor-intensive process involving studios, actors, translators, and engineers. Now, AI voice dubbing tools like Maestra enable creators and businesses to translate, localize, and dub videos in 125+ languages — while preserving tone, pacing, and emotion — in a fraction of the time and cost.

In this ultimate guide, we’ll cover:

- What video dubbing is (and isn’t)

- How AI-powered dubbing works

- Key features of modern dubbing platforms

- Step-by-step guides to dubbing your videos

- Best practices for high-quality multilingual video localization

- Industry-specific applications and case studies

- How to choose the right dubbing tool for your needs

- The future of AI video dubbing

Why AI Video Dubbing Matters in 2025

1. The Rise of Multilingual Video Consumption

Global video stats you can’t ignore:

- 70%+ of YouTube watch time comes from outside a creator’s home country.

- Over 5 billion people watch videos online every day.

- 9 in 10 users prefer content in their native language.

- Video localization increases engagement by up to 80%.

The internet is no longer just global in reach — it’s global in expectation. Audiences now assume content will be available in their own language, whether they’re watching Netflix dramas, YouTube tutorials, TikTok videos, or corporate training modules.

2. Why Dubbing Often Beats Subtitles for Engagement

Subtitles have their place — they’re essential for accessibility and preferred by some audiences. But dubbing delivers an immersive, distraction-free experience:

With subtitles:

- Viewers split attention between text and visuals.

- Fast-paced dialogue is harder to follow.

- Emotional delivery is muted.

With dubbing:

- Viewers focus fully on the visuals.

- Emotional tone, pacing, and nuance are preserved.

- Complex information is easier to digest.

Case in point:

When Netflix began aggressively dubbing its original content into multiple languages, non-English markets exploded. Spanish drama La Casa de Papel (Money Heist) became a global phenomenon — largely thanks to its availability in dubbed English, French, Italian, German, and more.

3. Accessibility, Inclusion, and Compliance

Video dubbing also plays a critical role in making content accessible:

- Dyslexia & reading challenges: Removes the need for reading subtitles.

- Young children: Engages pre-reading audiences with full comprehension.

- Visual impairments: Supports those who can’t rely on reading on-screen text.

In some industries, multilingual content isn’t just nice to have; it’s a regulatory requirement. Accessibility laws and standards such as the ADA and WCAG, along with regional language laws, can make accessible, multilingual video a matter of compliance.

What Is Video Dubbing?

Video dubbing is the process of replacing the spoken audio track in a video with a new voice track in a different language, synchronized to match the timing and lip movements of the original.

It’s not just “reading a translation” — effective dubbing aims to make the localized video feel like it was originally created in the target language.

Dubbing vs. Voice-over vs. Subtitles

| Feature | AI Dubbing | Voice-over | Subtitles |
| --- | --- | --- | --- |
| Audio Replacement | Full spoken dialogue | Overlay narration | None |
| Lip Sync | Yes (AI lip-sync dubbing) | No | No |
| Immersion | High | Medium | Medium |
| Accessibility | High | Medium | High |
| Cost (Traditional) | High | Medium | Low |
| Cost (AI) | Low and scalable | Low and scalable | Low |

The Evolution of Dubbing

- 1930s–50s: Manual translation + in-studio recording for cinema.

- 1960s–2000s: Expansion to TV and international markets, still costly.

- 2010s: Digital workflows improved efficiency but relied on human talent.

- 2020s: AI dubbing democratizes multilingual video localization, enabling small creators to do what only studios could before.

How AI Video Dubbing Works

AI voice dubbing software like Maestra automates what used to be a labor-intensive process. Here’s how a typical dubbing workflow runs:

Step 1: Upload Your Video

Supported formats: MP4, MOV, AVI, WMV, MKV.

Import from platforms like YouTube, Vimeo, Zoom, Dropbox, or Google Drive.

Pro tip: Batch upload for multi-video localization projects.

Step 2: AI Transcription

Speech recognition generates a time-coded transcript.

Modern AI transcription can:

- Separate multiple speakers.

- Handle background noise.

- Adapt to accents and varying speeds.
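
To make "time-coded transcript" concrete, here is a minimal sketch of how such a transcript could be represented. The `Segment` fields, the speaker labels, and the 0.5-second merge gap are illustrative assumptions, not the format of any particular platform.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One time-coded span of speech from a single speaker."""
    start: float   # seconds from the start of the video
    end: float     # seconds from the start of the video
    speaker: str   # speaker label, e.g. "Speaker 1"
    text: str


def to_timecode(seconds: float) -> str:
    """Format seconds as HH:MM:SS.mmm."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def merge_same_speaker(segments: list[Segment], max_gap: float = 0.5) -> list[Segment]:
    """Join consecutive segments from one speaker when the pause between them is short."""
    merged: list[Segment] = []
    for seg in segments:
        prev = merged[-1] if merged else None
        if prev and prev.speaker == seg.speaker and seg.start - prev.end <= max_gap:
            merged[-1] = Segment(prev.start, seg.end, prev.speaker, f"{prev.text} {seg.text}")
        else:
            merged.append(seg)
    return merged


# Hypothetical transcript with two speakers.
transcript = [
    Segment(0.0, 2.4, "Speaker 1", "Welcome back to the channel."),
    Segment(2.6, 5.1, "Speaker 1", "Today we are testing a new laptop."),
    Segment(5.8, 7.0, "Speaker 2", "Let's get started."),
]

for seg in merge_same_speaker(transcript):
    print(f"[{to_timecode(seg.start)} - {to_timecode(seg.end)}] {seg.speaker}: {seg.text}")
```

Keeping start and end times on every segment is what lets the later steps (translation, voice synthesis, and sync) work line by line instead of on the whole video at once.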

Step 3: AI Translation

Using advanced neural machine translation, the transcript is converted into the target language. This goes beyond word-for-word replacement:

- Adjusts idioms and expressions.

- Preserves tone and formality level.

- Adapts culturally.

Step 4: Voice Synthesis & Voice Cloning

Choose from hundreds of natural-sounding AI voices or clone your own for authenticity.

Voice cloning benefits:

- Keep your own voice across languages.

- Maintain brand voice consistency.

- Build trust with repeat audiences.

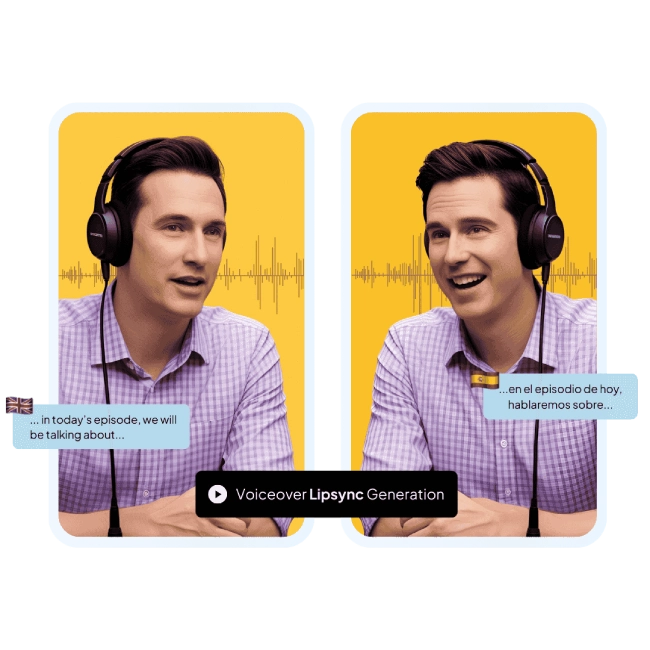

Step 5: AI Lip-Sync Dubbing

The AI adjusts speech pacing, pauses, and inflection to align with the speaker’s mouth movements, minimizing the uncanny “off-sync” effect.
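
The pacing part of this step comes down to simple arithmetic: how much faster or slower must the dubbed line play to fit the original time slot? The sketch below shows that calculation under stated assumptions. The function name and the 15% limit on speed changes are hypothetical, and real lip-sync systems likely do much more than time-stretching.

```python
def fit_speed(original_s: float, dubbed_s: float, max_change: float = 0.15) -> tuple[float, float]:
    """Return (speed_factor, leftover_s) for fitting dubbed audio into the original slot.

    speed_factor > 1 means the dubbed audio is played faster.
    leftover_s > 0 means the line still overruns the slot after the adjustment;
    leftover_s < 0 means there is silence left at the end of the slot.
    """
    if original_s <= 0 or dubbed_s <= 0:
        raise ValueError("durations must be positive")
    ideal = dubbed_s / original_s
    # Clamp the factor so the voice never sounds unnaturally fast or slow.
    factor = min(max(ideal, 1 - max_change), 1 + max_change)
    leftover = dubbed_s / factor - original_s
    return factor, leftover


# A 4.0 s original line whose translation takes 4.4 s: a 10% speed-up fits it.
print(fit_speed(4.0, 4.4))

# A translation that takes 6.0 s cannot fit within the limit; the leftover
# signals that the line should be shortened or re-translated.
print(fit_speed(4.0, 6.0))
```

A positive leftover is a useful cue for the review step: rather than forcing the audio to fit, it is often better to shorten the translated line.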

Step 6: Review & Edit

Built-in editors let you:

- Correct translations.

- Adjust timing for sync perfection.

- Swap voices or accents.

Step 7: Export & Publish

Export as:

- Fully dubbed MP4

- Audio track only

- Platform-specific formats (YouTube-ready, LMS-ready, etc.)
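
If you export the audio track only, you will need to combine it with the video yourself. One common way to do that outside any dubbing platform is the ffmpeg command-line tool. The sketch below only builds and prints the command; it assumes ffmpeg is installed separately, and the file names are hypothetical.

```python
import shlex


def build_mux_command(video_path: str, dub_audio_path: str, output_path: str) -> list[str]:
    """Build an ffmpeg command that keeps the video stream and swaps in the dubbed audio."""
    return [
        "ffmpeg",
        "-i", video_path,       # input 0: original video
        "-i", dub_audio_path,   # input 1: dubbed voice track
        "-map", "0:v:0",        # take the first video stream from input 0
        "-map", "1:a:0",        # take the first audio stream from input 1
        "-c:v", "copy",         # copy the video without re-encoding
        "-c:a", "aac",          # encode the audio as AAC for MP4 playback
        "-shortest",            # stop at the end of the shorter stream
        output_path,
    ]


cmd = build_mux_command("tutorial.mp4", "tutorial_es.wav", "tutorial_es.mp4")
print(shlex.join(cmd))
```

To actually run it, pass the list to `subprocess.run(cmd, check=True)`. Copying the video stream avoids a second round of compression, so the picture quality stays identical to the original.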

What Video Dubbing Isn’t

With all the buzz around AI video dubbing and multilingual video localization, it’s easy to misunderstand what dubbing actually involves — and what it doesn’t. Knowing what dubbing isn’t can help you avoid common mistakes and choose the right approach for your content.

Here’s what video dubbing is not:

❌ Just Adding Subtitles

Subtitles are text translations on screen — they don’t replace the spoken audio. While they’re great for accessibility, they don’t deliver the same immersive, “native” experience as AI lip-sync dubbing.

❌ Word-for-Word Machine Translation

Effective dubbing doesn’t simply swap words between languages. It adapts tone, cultural references, and phrasing so the message makes sense and feels natural in the target language.

❌ A Generic Voice-Over

Voice-over is typically a narration laid on top of the original audio, often without lip-sync. True dubbing replaces the original voice track entirely and aligns it with the speaker’s mouth movements for seamless viewing.

❌ One-Size-Fits-All Localization

Good dubbing isn’t about picking any random voice. Voice cloning for video content can replicate your unique tone and pacing, ensuring the dubbed version feels authentic — not disconnected.

❌ A Quick Fix for Bad Audio

If your source audio is noisy, muffled, or unclear, AI dubbing can’t magically restore lost clarity. Quality dubbing starts with clean, well-recorded audio in the original language.

How Dubbing Differs from Voice-over and Subtitles

While “dubbing” is sometimes used interchangeably with “voice-over,” they are not the same:

| Feature | AI Video Dubbing | Voice-over | Subtitles |
| --- | --- | --- | --- |
| Audio Replacement | Full replacement of spoken dialogue | Overlaid narration | No audio replacement |
| Lip Sync | Yes (AI lip-sync dubbing) | No | No |
| Immersion Level | High | Medium | Medium |
| Accessibility | High | Medium | High |
| Cost (Traditional) | High | Medium | Low |
| Cost (AI) | Low and scalable | Low and scalable | Low |

- Voice-over is typically used for documentaries, instructional videos, or news reports — where the original voice may still be faintly audible, and synchronization isn’t a priority.

- Subtitles provide a text translation without touching the original audio — they’re important for accessibility but require viewers to read while watching.

- Dubbing fully replaces the original speech, often aiming to match lip movement and timing, delivering a more immersive, “native” experience.

The Evolution of Video Dubbing

The journey from clunky, mismatched dubs to today’s seamless AI-driven processes spans almost a century.

1930s–1950s: The Birth of Dubbing

Dubbing first emerged as a way to distribute films internationally without re-shooting them.

Scripts were manually translated and adapted to fit timing constraints.

Actors recorded lines in professional studios, guided by directors to match mouth movements as closely as possible.

1960s–2000s: The Golden Age of Traditional Dubbing

Dubbing became standard for distributing foreign-language films and TV shows in markets like Germany, Spain, Italy, and France.

Skilled teams of translators, adaptors, voice actors, and sound engineers worked together — but the process was slow and expensive.

Matching lip movements was often approximate, and some translations took creative liberties to fit timing.

2010s: Digital Workflows

Digital recording and editing tools sped up dubbing production.

Globalization increased the demand for dubbing in advertising, corporate training, and online content.

Costs remained high because professional human voice talent and studios were still required.

2020s: The AI Dubbing Revolution

AI voice dubbing software like Maestra made it possible to:

- Automatically transcribe audio.

- Translate it into 125+ languages with context awareness.

- Generate natural-sounding voices without human actors.

- Synchronize timing automatically with AI lip-sync dubbing.

This democratized multilingual video localization, allowing YouTubers, educators, marketers, and even small nonprofits to create dubbed content without large budgets or teams.

Why AI Is a Game-Changer for Dubbing

Traditional dubbing can cost $1,000–$5,000+ per finished minute for high-quality productions, depending on complexity and number of languages. AI dubbing reduces this cost dramatically while also:

- Scaling faster: Dubbing an entire library of videos can take days instead of months.

- Maintaining brand voice: Voice cloning for video keeps your original tone and personality.

- Enabling real-time dubbing: Live webinars or events can now be streamed in multiple languages simultaneously.

- Opening global markets: Smaller creators and companies can afford to localize content for dozens of languages.

The Science Behind Natural-Sounding AI Voices

One of the most remarkable leaps in AI video dubbing isn’t just speed — it’s how real the voices sound. Gone are the days when synthetic speech felt robotic and flat. Modern AI voice dubbing software can create performances that are warm, expressive, and almost indistinguishable from human delivery.

1. Neural Text-to-Speech (TTS) Models

At the heart of today’s voice cloning for video content are deep learning models trained on massive datasets of human speech. Neural TTS doesn’t simply map letters to sounds — it learns how tone, emphasis, and rhythm shift in natural conversation.

2. Prosody and Intonation

Prosody is the melody of speech — the rises, falls, pauses, and pacing that convey meaning beyond words. Advanced multilingual video localization platforms model these patterns to make dubbed voices sound authentic, not monotone.

3. Emotional Modeling

Some AI systems are trained to detect and reproduce emotional cues. This means a marketing video can sound energetic and upbeat, while a documentary voiceover can feel serious and reflective — all in the target language.

4. Voice Cloning Consistency

By training on a few minutes of high-quality voice samples, AI can replicate a speaker’s unique timbre, pitch, and style. The result: every dubbed version sounds like you, even if it’s in Japanese, Arabic, or Spanish.

5. Real-Time Rendering

Recent breakthroughs in processing power mean AI voices can now be generated instantly, opening the door for real-time video dubbing software in live events, webinars, and broadcasts.

Example Workflow: Dubbing a YouTube Tutorial into Spanish and Hindi

Let’s imagine you run a tech YouTube channel and want to expand to Spanish-speaking and Hindi-speaking audiences:

- Upload your original tutorial to Maestra via YouTube link.

- Transcription completes in minutes, labeling your speech segments.

- Translation produces both Spanish and Hindi scripts.

- You choose voice cloning so your own voice is used in both languages.

- Lip-sync AI adjusts timing so it looks natural.

- You quickly edit a few tech terms to ensure they’re correctly localized.

- Export both versions and upload them to separate language-specific playlists.

In under an hour, you’ve expanded your reach to potentially hundreds of millions of new viewers.

Unique Features of Modern AI Dubbing Platforms

Not all AI video dubbing software is created equal. Some tools focus on speed but sacrifice natural voice quality, while others deliver impressive realism but lack scalability for large projects.

When choosing a platform for multilingual video localization, it’s important to look beyond the basic “translate and dub” promise and understand what truly sets modern solutions apart.

Here are the key features you should expect from the best dubbing software:

Multi-Language and Accent Support

Why it matters:

It’s not enough for a dubbing tool to “cover Spanish” — you need to choose which Spanish. The Spanish spoken in Mexico differs from that in Spain, Argentina, or Colombia in vocabulary, pronunciation, and even cultural tone.

What to look for:

- 125+ languages with regional accent options (e.g., Brazilian vs. European Portuguese).

- Ability to switch accents within the same language for different audience segments.

- Neutral accent options for international use.

Example:

A multinational brand launching an ad campaign in Latin America might choose Mexican Spanish for its core market but also produce Colombian-accented versions for targeted regional ads.

Voice Cloning for Video Content

Why it matters:

Audiences build trust and connection with a voice they recognize. Changing that voice in localized content can make it feel less personal or less “official.”

What to look for:

- High-fidelity voice cloning that preserves tone, inflection, and pacing.

- Ability to apply cloned voices across multiple languages.

- Consistency across projects so your “brand voice” never changes unintentionally.

Example:

An e-learning instructor uses voice cloning to keep their exact tone and style when localizing courses into 10 languages — improving student engagement and completion rates.

AI Lip-Sync Dubbing

Why it matters:

Poor lip-syncing is one of the biggest immersion breakers in traditional dubbing. AI lip-sync technology aligns dubbed audio with visible mouth movements, making the experience more seamless for viewers.

What to look for:

- Dynamic speech pacing adjustments to match original lip movement.

- Accurate alignment for languages with different sentence lengths.

- Options to prioritize either exact sync or natural delivery, depending on the content type.

Example:

In a promotional video, lip-sync AI ensures that a spokesperson’s speech looks natural in both French and Japanese versions — avoiding the awkward “off-time” effect.

Real-Time Video Dubbing Software

Why it matters:

For live events — webinars, product launches, international meetings — pre-recorded dubbing isn’t enough. Real-time multilingual streaming lets you broadcast to audiences in different languages simultaneously.

What to look for:

- Low-latency translation and dubbing.

- Multiple language audio channels for the same live stream.

- Integration with platforms like Zoom, YouTube Live, or custom RTMP setups.

Example:

A tech company launches a new product via a live webinar. With real-time dubbing, viewers in Brazil, Germany, and Korea can listen to the presentation in Portuguese, German, and Korean as it happens.

Secure Cloud-Based Editing

Why it matters:

Most dubbing projects involve sensitive content — unreleased product demos, internal training materials, or confidential client videos. Security is non-negotiable.

What to look for:

- End-to-end encryption for all uploads and exports.

- SOC 2 and GDPR compliance for global privacy standards.

- Controlled access for team collaboration.

Example:

A legal training video for multinational staff is processed in a SOC 2-certified platform, ensuring confidentiality and regulatory compliance.

Multi-Platform Export and Sharing

Why it matters:

Once dubbed, videos need to be ready for the platforms where they’ll live — YouTube, TikTok, internal LMS, or corporate intranets.

What to look for:

- Export presets for common platforms.

- Audio-only export for post-production mixing.

- Shareable review links for stakeholder approval.

Example:

A marketing agency exports dubbed versions of a product demo directly in YouTube-optimized format, with an additional audio-only track for use in a podcast.

Batch Processing and Automation

Why it matters:

Scaling multilingual content is impossible if you have to process each video manually. Batch processing allows you to handle multiple videos — and multiple languages — simultaneously.

What to look for:

- Bulk upload and translation.

- Workflow automation with API access for large-scale projects.

- Template-based settings for consistent results across files.

Example:

An online university dubs 200 lecture videos into five languages in a single automated run, reducing production time from months to days.
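
To see why batch processing matters at this scale, it helps to count the work involved. The sketch below expands a list of videos and target languages into individual jobs that share one settings template. The `DubJob` fields, the language codes, and the template keys are hypothetical; the step that submits jobs is left out because it depends on your platform's API.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class DubJob:
    video: str
    language: str
    voice: str
    lip_sync: bool


def build_jobs(videos: list[str], languages: list[str], template: dict) -> list[DubJob]:
    """Expand every (video, language) pair into one job that shares the template settings."""
    return [
        DubJob(video=v, language=lang, voice=template["voice"], lip_sync=template["lip_sync"])
        for v, lang in product(videos, languages)
    ]


# Hypothetical inputs matching the example above: 200 lectures, five languages.
videos = [f"lecture_{n:03d}.mp4" for n in range(1, 201)]
languages = ["es", "fr", "hi", "ar", "pt-BR"]
template = {"voice": "cloned-instructor", "lip_sync": False}

jobs = build_jobs(videos, languages, template)
print(len(jobs))  # 200 videos x 5 languages = 1000 jobs
```

A thousand jobs is far too many to configure by hand, which is where template-based settings and API access earn their place on the feature list.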

Built-In Editing and Fine-Tuning Tools

Why it matters:

No AI is perfect. Fine-tuning is essential for high-stakes content.

What to look for:

- Segment-by-segment editing of translations.

- Voice re-selection for specific lines.

- Real-time playback for review.

Example:

A safety training video includes technical terms that AI initially translates too literally; the editor quickly adjusts them in-platform before export.

Collaboration Features

Why it matters:

Many dubbing projects involve multiple stakeholders — marketing teams, translators, brand managers, and technical reviewers.

What to look for:

- Multi-user access with role-based permissions.

- Commenting and feedback features.

- Version history for transparency.

Best Practices for High-Quality AI Video Dubbing

Using AI video dubbing software makes the process dramatically faster and more cost-effective than traditional studio dubbing — but speed shouldn’t come at the cost of quality. Even with the most advanced multilingual video localization platforms, following a set of best video dubbing practices ensures your content sounds natural, authentic, and culturally appropriate.

These recommendations come from a combination of professional dubbing standards, localization principles, and insights from high-volume AI dubbing projects.

Start with High-Quality Source Audio

Why it matters:

AI transcription accuracy is directly tied to the clarity of the source audio. Background noise, music, or poor microphone quality can reduce accuracy and force extra editing.

Action Steps:

- Record with a directional microphone to minimize room echo.

- Avoid overlapping voices unless the dialogue requires it.

- Keep background music low during speaking sections.

Example:

An e-learning provider improved dubbing accuracy from 92% to 98% simply by switching from laptop microphones to entry-level USB condenser mics.
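
If you want to measure transcription accuracy yourself, one widely used metric is word error rate (WER): the number of word-level edits needed to turn the AI transcript into a correct reference, divided by the length of the reference. The sketch below is a minimal implementation. Whether the accuracy figures quoted above were measured this way is not stated, so treat it as one possible yardstick.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between the two texts, divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] is the edit distance between the reference words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: a human-checked line versus the AI transcript of it.
reference = "record with a directional microphone to minimize room echo"
hypothesis = "record with a directional microphone to minimise room"
wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.2%}, accuracy: {1 - wer:.2%}")
```

Running the same check on a short sample before and after a microphone upgrade gives you a concrete number to compare, instead of a general impression.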

Script for Localization From the Start

Why it matters:

If your original script is full of idioms, cultural references, or overly complex sentences, AI translation will either produce awkward results or require heavy manual editing.

Action Steps:

- Keep sentences concise (10–15 words is ideal).

- Avoid metaphors or region-specific slang unless you plan to localize them.

- Use terminology consistently throughout your script.

Example:

Instead of “That’s a home run for our marketing team,” say “That’s a big success for our marketing team.” The latter is easier to translate naturally into multiple languages.
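
These checks are easy to automate before you record. Here is a minimal sketch of a script linter that flags sentences over 15 words and any idioms on a watch list. The idiom list and the sentence-splitting rule are simplifying assumptions; adapt both to your own scripts.

```python
import re

MAX_WORDS = 15  # upper end of the 10-15 word guideline above
# Hypothetical watch list; extend it with idioms from your own scripts.
IDIOMS = ["home run", "piece of cake", "ballpark figure"]


def lint_script(script: str) -> list[str]:
    """Return warnings for long sentences and listed idioms."""
    warnings = []
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", script) if s.strip()]
    for number, sentence in enumerate(sentences, start=1):
        word_count = len(sentence.split())
        if word_count > MAX_WORDS:
            warnings.append(f"Sentence {number}: {word_count} words (limit {MAX_WORDS})")
        for idiom in IDIOMS:
            if idiom in sentence.lower():
                warnings.append(f"Sentence {number}: idiom '{idiom}'")
    return warnings


script = (
    "That's a home run for our marketing team. "
    "We shipped the update on time."
)
for warning in lint_script(script):
    print(warning)
```

Fixing these issues in the source script is usually cheaper than fixing them separately in every translated version.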

Choose the Right Voices for the Audience

Why it matters:

Voice selection isn’t just about clarity — it’s about connecting with your audience. A voice that works for a corporate investor presentation may sound too formal for a youth-oriented marketing campaign.

Action Steps:

- Test several voices with your target audience before committing.

- Match tone to content type (warm and friendly for education, authoritative for compliance training).

- Consider regional accents if targeting specific markets.

Example:

A lifestyle YouTuber targeting Latin America switched from a European Spanish voice to a Mexican Spanish voice, resulting in a 25% increase in viewer engagement.

Leverage Voice Cloning for Consistency

Why it matters:

If you regularly appear in your own videos, voice cloning for video content keeps your voice recognizable across all localized versions, preserving brand identity.

Action Steps:

- Record at least 5–10 minutes of clean speech for voice cloning training.

- Review cloned voice output in at least two target languages before scaling.

- Maintain the same cloned voice profile across all projects.

Example:

A SaaS founder cloned their voice for 12 languages. Their product demo videos felt personal and authentic in every region.

Balance Lip Sync with Natural Delivery

Why it matters:

While AI lip-sync dubbing improves immersion, forcing exact lip alignment in every case can make speech sound rushed or unnatural, especially in languages that need more words or syllables to express the same idea.

Action Steps:

- For marketing and film, prioritize lip-sync accuracy.

- For training and e-learning, prioritize pacing and clarity over perfect lip match.

- Use your platform’s “sync tolerance” settings to find the right balance.

Review Every Translation for Accuracy and Tone

Why it matters:

Even the most advanced AI models can mistranslate technical terms, brand-specific language, or culturally sensitive phrases.

Action Steps:

- Have a native speaker review high-priority translations.

- Pay special attention to brand names, product terminology, and compliance language.

- Adjust formal/informal tone according to the market.

Example:

In a health awareness campaign, AI translated “screening” as “display” in some languages — human review caught and fixed this before release.

Customize Timing for Audience Comfort

Why it matters:

Some languages require more syllables to convey the same meaning. Without adjustments, this can result in rushed delivery that’s hard to follow.

Action Steps:

- Stretch or compress timing within lip-sync constraints so the speech stays easy to follow.

- Split long sentences into multiple segments if necessary.

- Use platform editing tools to manually adjust difficult sections.

Keep Accessibility in Mind

Why it matters:

Dubbing should enhance accessibility, not replace it. Some viewers still prefer or require subtitles alongside dubbed audio.

Action Steps:

- Offer both dubbed audio and translated subtitles (dual localization).

- Follow accessibility standards like WCAG for captioning formats.

- Use color-coded subtitles for multi-speaker clarity in complex videos.

Test on Multiple Devices Before Release

Why it matters:

Audio can sound different on headphones, laptops, and mobile devices. A voice that sounds rich on studio monitors might sound muffled on a phone speaker.

Action Steps:

- Preview dubbed videos on desktop, phone, and TV before publishing.

- Check volume balance between voice and background audio.

- Optimize exports for platform-specific playback (e.g., mobile-first for TikTok).

Track Performance and Iterate

Why it matters:

Localization is an ongoing process. Measuring viewer engagement, watch time, and drop-off points in each language version can guide improvements.

Action Steps:

- Use analytics to compare performance between languages.

- Test different voices or tones for underperforming regions.

- Update translations when terminology or branding changes.
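
Comparing languages can be as simple as checking each version against your original-language baseline. The sketch below flags language versions whose average watch time falls below 75% of the baseline. The numbers, the language codes, and the 75% threshold are all hypothetical; use the export from your own analytics tool.

```python
from statistics import mean

# Hypothetical per-video average watch times in seconds, keyed by language version.
watch_times = {
    "en": [310, 295, 330],
    "es": [280, 300, 290],
    "hi": [150, 170, 160],
}


def underperforming(data: dict[str, list[float]], baseline: str, threshold: float = 0.75) -> list[str]:
    """List languages whose mean watch time is below threshold x the baseline mean."""
    base = mean(data[baseline])
    return [
        lang for lang, values in data.items()
        if lang != baseline and mean(values) < threshold * base
    ]


print(underperforming(watch_times, baseline="en"))
```

A flagged language is a prompt to investigate, not a verdict: the cause could be the voice, the translation, or simply a smaller audience for that topic.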

Industry-Specific Applications & Case Studies for AI Video Dubbing

One of the biggest strengths of AI video dubbing software is its versatility. Whether you’re a solo YouTuber, a global enterprise, or a nonprofit, the ability to translate and dub videos into multiple languages can unlock new audiences, revenue streams, and impact.

Let’s explore how multilingual video localization works across industries — with examples of real-world benefits.

YouTubers & Independent Creators

The challenge:

Many creators build loyal audiences locally but struggle to reach viewers in regions where their primary language isn’t spoken. Subtitles help, but they don’t always hold attention — especially for entertainment and storytelling content.

How AI dubbing helps:

- Dub YouTube videos into multiple languages without reshooting.

- Keep your original personality with voice cloning for video content.

- Target specific regions with localized voices and accents.

Case Study:

A gaming YouTuber with 500k English-speaking subscribers used Maestra to dub popular “Let’s Play” episodes into Spanish and Portuguese. Within 4 months:

- Subscriber base grew by 38%.

- Average watch time in Latin America increased from 3 minutes to over 9 minutes.

- Sponsorship revenue expanded to regional gaming brands.

Key takeaway: Entertainment content benefits heavily from AI lip-sync dubbing, as it preserves emotional delivery and keeps immersion high.

E-Learning & Education

The challenge:

Online education platforms face demand from global students but can’t always afford to re-record courses in multiple languages.

How AI dubbing helps:

- Localize lectures and tutorials without re-recording.

- Retain instructor credibility with voice cloning.

- Support accessibility by pairing dubbed audio with translated subtitles.

Case Study:

A MOOC (Massive Open Online Course) provider localized its top 20 courses into French, Hindi, and Arabic. The result:

- Enrollment from non-English-speaking countries doubled in 6 months.

- Course completion rates increased by 22% in localized versions.

- Student feedback praised “natural-sounding instructors” in their native languages.

Key takeaway: Educational content sees measurable gains in completion rates when learners can process information in their primary language.

Corporate Training & Internal Communications

The challenge:

Global companies need consistent training content for employees in multiple regions, but creating region-specific versions is slow and costly.

How AI dubbing helps:

- Roll out compliance training in dozens of languages simultaneously.

- Ensure consistent messaging with brand-approved voices.

- Speed up onboarding by localizing internal communication videos.

Case Study:

A logistics company with operations in 15 countries used Maestra to dub health & safety training into 12 languages. The result:

- Production timelines dropped from 3 months to just 2 weeks.

- Compliance test pass rates improved by 30% in non-English-speaking regions.

- Workplace safety incidents decreased due to clearer, native-language training.

- Employee feedback highlighted greater confidence and understanding when learning in their own language.

Key takeaway:

AI dubbing doesn’t just save time and money — it improves comprehension, retention, and overall workplace safety in global organizations.

Marketing & Advertising

The challenge:

Launching marketing campaigns in multiple languages often means separate shoots, multiple voice actors, and long production timelines — slowing down time-to-market.

How AI dubbing helps:

- Simultaneous multilingual ad launches without extra filming.

- Match voices and tones to cultural expectations in each market.

- AI lip-sync dubbing ensures the spokesperson’s mouth movements align, preserving authenticity.

Case Study:

A tech brand launching a wearable device used Maestra to dub its 90-second product ad into 10 languages for social media.

- Campaign launched in all target markets on the same day.

- Achieved 21% higher engagement compared to previous subtitled campaigns.

- Saved over $40,000 compared to hiring regional voice actors and studios.

Key takeaway:

Speed and simultaneous delivery can be game-changers for time-sensitive campaigns like product launches.

NGOs & Public Sector

The challenge:

Nonprofits and public institutions often need to share critical information across diverse language communities — sometimes during emergencies — but budgets and timelines are tight.

How AI dubbing helps:

- Real-time video dubbing software for live broadcasts in multiple languages.

- Quickly localize public health announcements, educational PSAs, and safety messages.

- Maintain trust by using consistent voice delivery for official communications.

Case Study:

An international health NGO used Maestra to dub a COVID-19 safety series into 22 languages in under a week.

- Video reach tripled compared to English-only versions.

- Engagement among rural language communities increased significantly.

- Reduced misinformation by ensuring accurate, culturally sensitive messaging.

Key takeaway:

For NGOs, the ability to quickly and affordably localize video content can directly impact public safety and awareness.

Entertainment & Media

The challenge:

Film, TV, and streaming content thrive on emotional connection — but bad dubbing can ruin immersion and lead to negative audience reviews.

How AI dubbing helps:

- Preserve acting performance with expressive AI voices or voice cloning from original actors.

- AI lip-sync dubbing for seamless viewing experiences.

- Localize entire series or film libraries at a fraction of traditional dubbing cost.

Case Study:

A mid-sized streaming service used AI dubbing to offer regional language versions of indie films that otherwise wouldn’t have justified the cost of human dubbing.

- Subscription sign-ups in target regions rose by 17% in three months.

- Viewer satisfaction ratings for dubbed content were within 3% of subtitled originals.

Key takeaway:

AI opens the door for smaller players to compete with global entertainment giants by offering multilingual libraries without massive budgets.

Dub Videos with AI

Maestra's video dubber is one of the best solutions in the industry when it comes to video dubbing. With an incredible library of AI voices in many languages, it has advanced features such as:

- Accent and emotion options

- Voice cloning and lip sync

- Real-time dubbing with voice cloning

- Advanced yet intuitive editing

Try Maestra's video dubber today, for free!

FAQ

What is AI video dubbing?

AI video dubbing is the process of replacing the spoken audio in a video with a translated voice track generated by artificial intelligence. Unlike simple voice-over, AI video dubbing software can synchronize speech with lip movements, preserve tone and pacing, and even replicate the original speaker’s voice through voice cloning.

How accurate is AI video dubbing compared to traditional dubbing?

With high-quality source audio, modern AI dubbing tools can achieve 95%+ accuracy in transcription and translation. While professional human dubbing may still edge out AI in nuanced acting or comedy, AI dubbing delivers speed, scalability, and cost-efficiency that traditional methods can’t match, especially for corporate, educational, and marketing content.

Can AI dubbing make my video sound natural?

Yes, AI-generated voices have advanced significantly, with neural TTS (text-to-speech) capable of natural intonation, emphasis, and pacing. With AI lip-sync dubbing and expressive voice options, dubbed audio can sound smooth, conversational, and immersive.

What languages are supported?

Platforms like Maestra support 125+ languages and accents, including regional variations like Brazilian Portuguese, Mexican Spanish, and Canadian French. This allows you to target specific markets more effectively rather than relying on one “global” version of a language.

What is voice cloning, and why should I use it?

Voice cloning for video content is the process of training an AI model on your voice recordings so it can replicate your tone, pitch, and style in other languages. This helps maintain brand identity and personal connection with your audience across multilingual versions.

How long does AI dubbing take?

It depends on video length and the number of target languages, but a 10-minute video can often be fully dubbed into multiple languages in under an hour. This is a fraction of the days or weeks traditional dubbing requires.

Can I dub live events in real time?

Yes — real-time video dubbing software can translate and stream your event audio in multiple languages instantly. This is ideal for webinars, conferences, product launches, and global company meetings.

Will AI dubbing replace human dubbing entirely?

Not necessarily. While AI is well suited to scaling corporate training, marketing videos, or YouTube content, certain high-budget film and TV productions may still prefer human actors for complex emotional delivery. The future likely involves hybrid workflows that combine AI efficiency with human creative oversight.

Is AI video dubbing secure?

Yes, with the right platform. Maestra uses end-to-end encryption and complies with SOC 2 and GDPR standards to keep your content and data secure during upload, processing, and storage.

Can I add subtitles to a dubbed video?

Absolutely. Many creators use dual localization, combining dubbed audio with translated subtitles. This improves accessibility and gives viewers the option to both hear and read in their language.

What formats can I export in?

You can typically export:

- Fully dubbed MP4 video with integrated audio.

- Audio-only files for mixing in external software.

- Platform-optimized versions for YouTube, TikTok, LMS systems, or internal networks.

How much does AI dubbing cost?

Pricing varies by platform, but AI dubbing is typically 10–20x cheaper than traditional dubbing. Costs are usually based on video length and number of languages.

Can AI dubbing handle technical or industry-specific terms?

Yes, but for best results, it’s wise to review translations or create a custom glossary in your platform. This ensures accurate handling of specialized vocabulary.
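
A glossary is also easy to check automatically during review. The sketch below reports any glossary term that appears in the source line but whose approved translation is missing from the translated line. The glossary entry and sample sentences are hypothetical, and simple substring matching is an assumption that will not handle inflected forms.

```python
def glossary_violations(source: str, translation: str, glossary: dict[str, str]) -> list[str]:
    """Report glossary terms found in the source whose approved translation is missing."""
    problems = []
    for term, approved in glossary.items():
        if term.lower() in source.lower() and approved.lower() not in translation.lower():
            problems.append(f"'{term}' should be rendered as '{approved}'")
    return problems


# Hypothetical English-to-Spanish glossary entry for a health campaign.
glossary = {"screening": "cribado"}

source = "Book your free screening today."
bad = "Reserve su pantalla gratuita hoy."    # "pantalla" means a display screen
good = "Reserve su cribado gratuito hoy."

print(glossary_violations(source, bad, glossary))
print(glossary_violations(source, good, glossary))
```

A check like this does not replace a native-speaker review, but it catches the most costly terminology slips before anyone has to listen to the audio.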